Developing a website or a web app can be a challenging experience for the business owner and the developers themselves. With the added pressure of time and cross-browser compatibility, the experience can become overwhelming.

Various approaches, including Agile development and Scrum methodology, can be used to develop a website. Still, having a web development team workflow can save unnecessary stress. By having the workflow set up correctly and with some practical collaboration tools and web design software, it is possible to keep the development process moving.

Using a version control system such as Git, together with a hosting platform like GitHub, helps maintain order in the codebase. Clearly written user stories and use cases help avoid confusion about expectations versus the final product.

Selecting the optimal web development team workflow requires understanding the project’s specific demands and characteristics. Responsive design and ensuring website optimization are essential. It is not simply a matter of filling in a template or using a CMS. Each new project demands the building of a unique and customized framework for every individual client, considering the web application architecture and necessary API integrations.

A handy guide to establishing a web development team workflow with best practices is presented here. These suggestions can be applied to the entire process of website production and deployment in both staging and production environments.

Some of the Issues

In project management, there is a humorous saying: a project can be either good and fast, good and cheap, or fast and cheap. It can never be good, fast, and cheap.

The reality, especially in web development, is more complicated. It is generally inadvisable to ignore any one of these factors, so a compromise may be necessary. The client and the developer need to agree on that compromise, and a clean design-to-development handoff is crucial to delivering it.

Clear communication channels between designers and, for example, Webflow agency developers are essential to ensure seamless execution and alignment of the envisioned design with the technical implementation.

Still, this saying accurately captures the factors you need to identify while engaging in web development:

- What will be delivered? (Considering both front-end and back-end development)

- What is the timeframe? (Incorporating sprint planning and task management)

- What are the costs? (Considering server configuration and web hosting costs)

These questions can help to define important elements that are relevant for web development:

- Budgeting

- Return on investment

- Project feasibility (Considering web security protocols)

- Assignment of resources (Including DevOps and full-stack development resources)

- Scheduling

- Marketing

- Project and product risk management.

The current complexity of websites and applications, especially with the need for continuous integration and continuous deployment, has resulted in website development becoming complicated, or even risky. Those simple key questions can become difficult if not virtually impossible to answer, even for the most proficient and adept developer.

How Can Risk Be Mitigated?

Smart developers always think at least one step ahead. With increasing experience, a developer may even be able to oversee the whole project, incorporating automated testing and maintaining performance monitoring. Thinking ahead is a vital aspect of risk management.

These are some potential risk areas:

- Infrastructure

- Backup service

- Load testing

- Error tracking

- Database management, and the selection of frameworks and libraries.

What Are the Solutions?

A clear web development team workflow, combined with Agile development and Scrum methodology, is essential for building good quality websites. Good quality does not necessarily mean costly or lengthy, but it should account for cross-browser compatibility and responsive design.

How can you select the right workflow for your project? Consulting with other web developers or even delving into web design forums is a good start. The problem is that practically every web development agency, whether focused on front-end development or back-end development, has a customized workflow and is ready to highlight the advantages of their approach using their preferred frameworks and libraries. Therefore, it can be tough to separate the wheat from the chaff.

An experienced developer, possibly one skilled in full-stack development, will usually be open to exploring areas outside web development. They may tailor methodologies and workflows from other businesses to suit their needs. They can explain why their approach, which may involve continuous integration and version control using platforms like Git and GitHub, is successful and will have examples of past accomplishments, showcasing user experience (UX) design and user interface (UI) design.

Below is an overview of one of the most popular methodologies, which might integrate aspects of DevOps and web application architecture. The overview will help to understand its value and bearing in website development. It highlights some common processes and project stages in web development, from wireframing to prototyping.

Before Starting a Project

Initial Negotiations

Negotiation starts with a meeting between the client and the developer. The client expresses expectations, demands, user stories, blueprints, and anything else envisioned. These ideas, which might include specific design-to-development handoff expectations, must be clear to the developers. At this point, the client will express what they consider to be the optimal strategy, whether it’s based on a CMS or a more custom approach.

Next, the development team prepares a proposal describing the prospective technology, database management, budget, and time frames. After review and acceptance by the client, the proposal is signed by both parties. The sprint plan and the start and end dates of the project should be determined and outlined in the proposal.

It is good practice for the developer to fill the client in on process details. This can include the work process, the collaboration tools that are used, and the roles and responsibilities of the primary team members involved. This will help the client develop trust, have a clear picture of the mechanics, and avoid confusion.

Estimate and Schedule

Two of the most important factors to consider in a project are unquestionably cost and time. The preliminary activities, including the task management phase, will help the developer to estimate both aspects of the different development stages.

In a sequential waterfall approach, making these estimates is commonly done at the beginning of a project. This requires a broad, comprehensive estimate with a complete work statement and code review protocols. With the agile model approach, incorporating elements of Kanban board management, this may be done at the start of each sprint.

It is normal that estimates change over the project lifetime. As understanding and insight increase, estimates must be adjusted. This is particularly true in the design and development of complex projects that might involve API integration and web security protocols.
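One way to make the uncertainty in an estimate explicit is a three-point (PERT-style) estimate. This is an illustrative technique, not something the workflow described here prescribes, and the stage names and hour figures below are invented for the example. Re-running the calculation at the start of each sprint with updated figures shows how the estimate shifts as understanding improves.

```python
from dataclasses import dataclass


@dataclass
class StageEstimate:
    """Hours for one development stage (all figures are hypothetical)."""
    name: str
    optimistic: float
    likely: float
    pessimistic: float

    def expected(self) -> float:
        # PERT weighting: the most likely value counts four times.
        return (self.optimistic + 4 * self.likely + self.pessimistic) / 6

    def spread(self) -> float:
        # Rough standard deviation commonly paired with PERT estimates.
        return (self.pessimistic - self.optimistic) / 6


def total_hours(stages: list[StageEstimate]) -> float:
    """Sum the expected hours of all stages."""
    return sum(stage.expected() for stage in stages)


stages = [
    StageEstimate("wireframes", 8, 12, 22),
    StageEstimate("front end", 30, 40, 80),
    StageEstimate("back end", 40, 60, 110),
]

for stage in stages:
    print(f"{stage.name}: {stage.expected():.1f} h (+/- {stage.spread():.1f})")
print(f"total: {total_hours(stages):.1f} h")
```

A wide spread on a stage is a signal to revisit that stage with the client before committing to a date.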

Defining the Concepts

Site Goals

Arguably, this is the most important phase in the web development process. Spending sufficient time on the proper planning at this stage prevents complications in the advanced stages of the project. Definition of the objectives, website architecture, content, deliverables, and role assignment are elements of this phase within the Scrum methodology.

Close cooperation with the client is crucial. Both parties need to agree on clear expectations. This includes the schedule, budget, deliverables, technical requirements, design, and content which has been structured for the target audience with a focus on user experience (UX).

Sitemap and Wireframe

Before mapping the website and discussing front-end development, the client and the developer should have a clear idea of the objective of the project. If this is not the case, then refinements or adjustments need to be made. It is advisable to clarify any issues before beginning the next stage of the project.

At this point, the scope of the project and the pages included will have been determined. The general objective of each page is defined in a single sentence that captures the content of the page.

Now work begins on an outline of the pages and how they will interact in the final product.

- Sitemap: Preparing a sitemap is central in content organization and ties into web application architecture. Constant updating of the sitemap is imperative to keep the project organized and on track.

- Wireframe: The general content of a page needs to be established before the final responsive design and graphics are fixed into place. Wireframes are low-fidelity sketches of a website or mobile application that put content into place. They also establish priorities for elements on the page and provide the documentation needed for the design-to-development handoff.
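A sitemap can also be kept as a small data structure next to the code, so it is easy to update as the project changes. The sketch below is only one possible representation: the page names and one-sentence goals are hypothetical, and `list_pages` is a helper written for this example.

```python
# Hypothetical sitemap: each key is a URL segment, each value holds the
# page's one-sentence objective and its child pages.
SITEMAP = {
    "": {
        "goal": "Introduce the company and route visitors to services.",
        "children": {
            "services": {
                "goal": "List the services on offer.",
                "children": {
                    "web-design": {
                        "goal": "Explain the web design service.",
                        "children": {},
                    },
                },
            },
            "contact": {"goal": "Let visitors send an enquiry.", "children": {}},
        },
    }
}


def list_pages(tree, prefix=""):
    """Return (path, goal) pairs for every page, parents before children."""
    pages = []
    for segment, page in tree.items():
        # Join the segment onto its parent path; the root becomes "/".
        path = f"{prefix}/{segment}".replace("//", "/")
        pages.append((path, page["goal"]))
        pages.extend(list_pages(page["children"], path))
    return pages


for path, goal in list_pages(SITEMAP):
    print(f"{path}: {goal}")
```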

The Building Phase

Design and Production

During the design phase, the designer and the programmer work together to make a coherent website, factoring in both user interface (UI) and user experience (UX) design principles.

Once the client has approved a draft, the designer collaborates with the graphic team. In software design, this is described as creating the look and feel of the design. It is achieved by selecting the compression, transparency, and color scheme of the layout, with a keen eye on web accessibility.

The production stage is the moment when the website is actually created. After the layout and design of the site are completed, the site enters the backend development engineering phase. All the individual graphic elements selected by the designer and graphic team are merged, leveraging full-stack development skills, to produce a fully functional site that’s ready for load testing and error tracking.

Website Development

In this phase, the web application architecture is built and the technical development of the website takes place. The programmers write the code and check cross-browser compatibility, the database engineers build the data models, and the systems engineers set up the servers and hosting.

The practical value of the previous preparation steps now becomes evident: the developers have all the information and resources they need. Completed properly, this preparation allows them to quickly and efficiently build, test, and implement all features of the deliverables.

The website development can be split into frontend and backend development. Both have unique characteristics and requirements. Often, a website developer specializes in one or the other, leveraging frameworks and libraries, but in some cases, one skilled individual, possibly a full-stack developer, may develop both.

Data Migration

If there is an existing website or web service, its information needs to be transferred to the new one.

This requires that one of the team members takes time to copy some or all of the information from the old website to the new one, by hand or through an API integration where one is available.
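When the old site's content can be exported, part of this copying can be scripted. The following is a minimal sketch under stated assumptions: the field names in `FIELD_MAP` are hypothetical, and the export is assumed to be a list of dictionaries. Records that look incomplete are set aside for a person to check rather than migrated blindly.

```python
# Hypothetical mapping from the old site's field names to the new site's.
FIELD_MAP = {
    "post_title": "title",
    "post_body": "content",
    "post_date": "published_at",
}


def migrate_record(old: dict) -> dict:
    """Rename known fields and trim stray whitespace from string values."""
    new = {}
    for old_key, new_key in FIELD_MAP.items():
        value = old.get(old_key)
        new[new_key] = value.strip() if isinstance(value, str) else value
    return new


def migrate(records: list[dict]) -> tuple[list[dict], list[dict]]:
    """Split records into migrated ones and ones needing manual review."""
    migrated, needs_review = [], []
    for record in records:
        converted = migrate_record(record)
        # A page without a title or body is set aside for a person to check.
        if converted["title"] and converted["content"]:
            migrated.append(converted)
        else:
            needs_review.append(record)
    return migrated, needs_review


old_pages = [
    {"post_title": " About us ", "post_body": "We build sites.", "post_date": "2019-03-01"},
    {"post_title": "", "post_body": "Orphaned text"},
]
migrated, needs_review = migrate(old_pages)
print(len(migrated), "migrated,", len(needs_review), "for manual review")
```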

Content Production and Entry

A new website may require old content to be restructured, for example to improve web accessibility, or new content to be created. Many web development agencies leave content management up to the client.

Depending on the type of client and their familiarity with Content Management Systems (CMS), this can be a daunting task. To assist with these aspects of website development and maintenance, other agencies offer content production and SEO copywriting services.

Testing and Launch

Quality Assurance Review

This review is still part of the technical stages of the project. It involves two roles on the development team: the reviewer, who analyses the code and modifications, and the reviewee, who wrote the code and opened the merge request.

The reviewer analyses the work, looking only at the technical aspects of the code, and ensuring best web development practices are followed.

This review leads to discussions with the writer about specific parts of the code, potential issues, and improvement. After review, the reviewer is also the one that closes the merge request.

Testing, Fixing Bugs and Collecting Feedback

No project is flawless. Although testing, including cross-browser compatibility checks and web application integration, is done throughout the development process, it is impossible to eliminate all issues. This applies to both the web application architecture and the design itself.

Once the website is finished, an extensive review of the content is performed. User experience (UX) and performance are tested to make sure that everything is working properly.

Besides a full technical check, testing is performed to ensure the user’s demands, especially in terms of web accessibility, are adequately met. Custom functionality, including any API integrations, is thoroughly checked. Broken or missing components are detected and fixed.

Regular troubleshooting during development, supported by a version control system such as Git, minimizes time and effort at this stage.

It is important for the development teams to also be involved in website testing. In this way, all aspects receive the attention they deserve, resulting in an agile and dynamic process.

Once the website has launched, feedback will be generated, often in terms of SEO performance. This feedback allows the development team to continue improving its product.

Website Launch

Features have now been built, tested, and accepted. The marketing team is keen to launch a huge advertisement campaign for the public. Both the client and the web development team, which may include full-stack developers, want to make sure that things go as smoothly as possible.

So, before giving the green light, the developer will have a launch plan ready, defining the launch and the period immediately afterward.

This plan includes steps to minimize risk. These risks may include data loss and downtime of the website, so the plan should contain an exit strategy to get the old website back online if necessary. Attention is also paid to online performance: key conversion points such as forms and eCommerce functions are tested.
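A launch plan of this kind can be expressed as a checklist that yields a clear decision. The sketch below is illustrative only: the check names are examples, the lambdas are placeholders for real checks (submitting a form, placing a test order), and the `critical` flag is an assumption about how a team might separate blocking issues from minor ones.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class LaunchCheck:
    name: str
    run: Callable[[], bool]   # returns True when the check passes
    critical: bool = True     # a failed critical check triggers the exit strategy


def evaluate_launch(checks: list[LaunchCheck]) -> str:
    """Return 'go', 'go-with-warnings', or 'rollback' for a set of checks."""
    failed = [check for check in checks if not check.run()]
    if any(check.critical for check in failed):
        return "rollback"
    return "go-with-warnings" if failed else "go"


# Placeholder checks; real ones would exercise the live site.
checks = [
    LaunchCheck("backup taken before launch", lambda: True),
    LaunchCheck("contact form submits", lambda: True),
    LaunchCheck("checkout completes a test order", lambda: True),
    LaunchCheck("analytics tag present", lambda: False, critical=False),
]

print(evaluate_launch(checks))
```

Here only a non-critical check fails, so the result is a launch with warnings; a failed backup or checkout check would instead point to the exit strategy.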

Top Web Design Workflow Tools

Below are the top 12 tools for speeding up web development workflow, helping to enhance user experience (UX):

Project Management

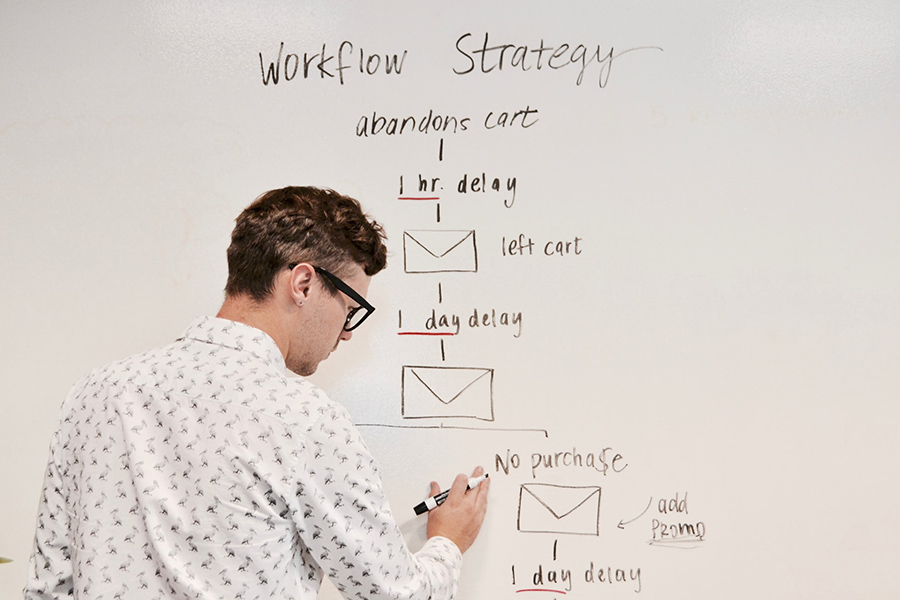

Trello

This is a free project management tool for creating project boards, which can give a fast, visual overview of the entire project.

On these boards, cards can be created, which represent specific tasks within the project.

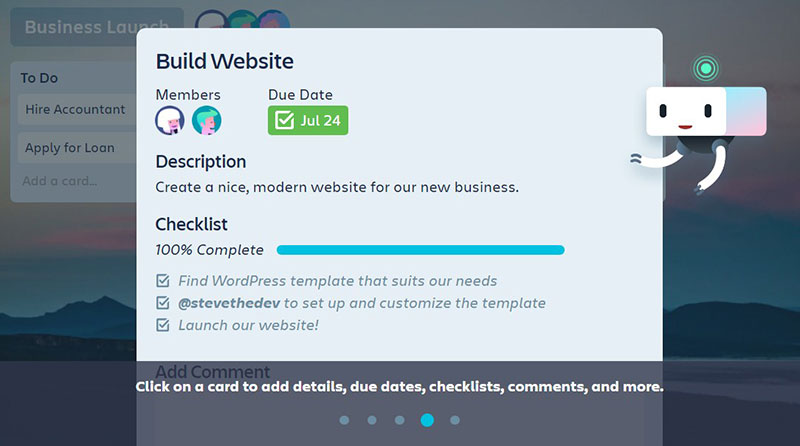

Basecamp

Another popular project management tool for web development is Basecamp.

This tool allows the manager and the team to keep track of every deliverable and enables them to stay focused and keep the workflow efficient.

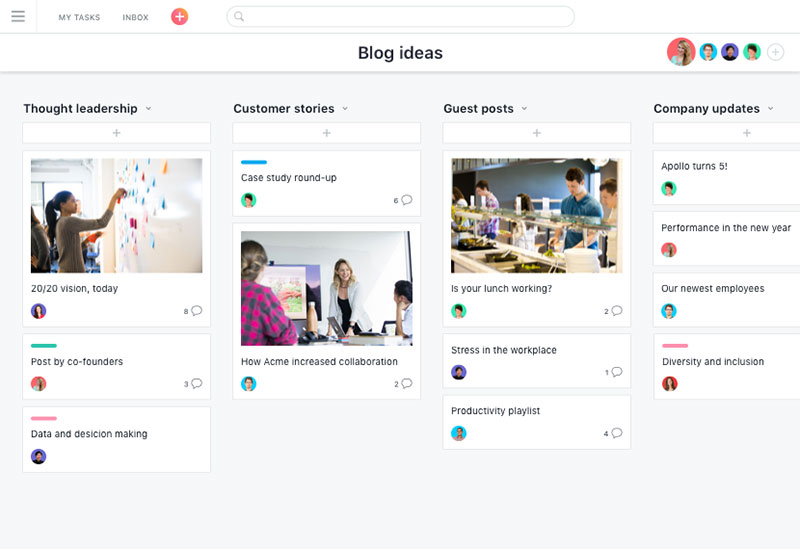

Asana

Asana is a visual management tool that allows for the easy creation of timelines and works well for a medium to large team. It is visual and robust, with a drag-and-drop project dashboard.

It sends reminders and status updates. It also facilitates communication and file sharing in a straightforward tool.

Wireframing

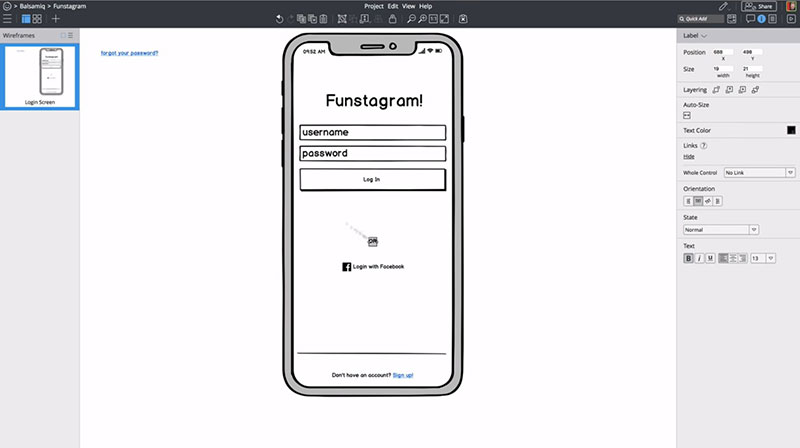

Balsamiq

This is a graphical wireframing tool that helps designers think through the user experience (UX).

For presentation purposes, the prebuilt widgets can be arranged in a drag-and-drop editor. This allows for a fast and dynamic discussion of the website design.

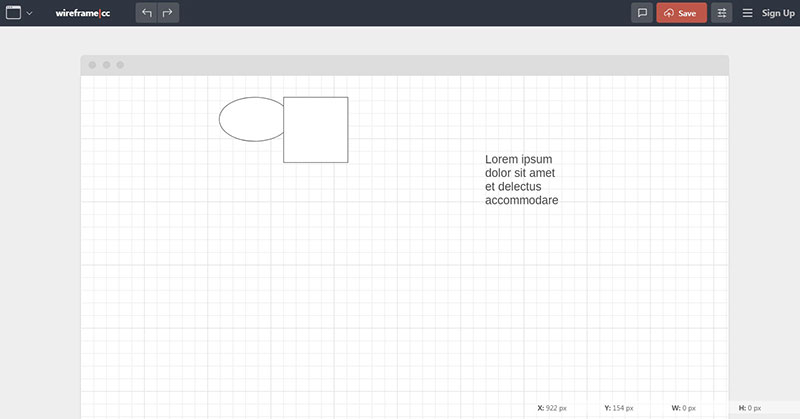

Wireframe.cc

Wireframe.cc offers a simple but unique interface for sketching wireframes.

Toolbars and icons are stripped away, which keeps the focus on the sketch and has made it a popular wireframing tool.

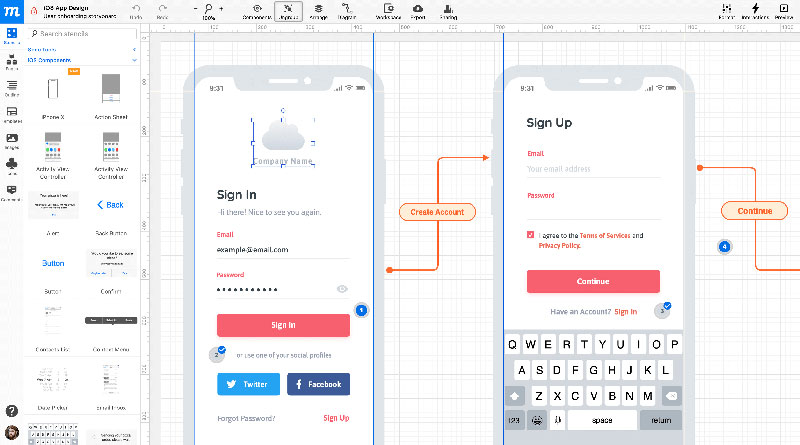

Moqups

Moqups is simple to navigate, offers many drag-and-drop features, and does not require a browser extension to operate.

It is well suited to preparing design presentations.

Design and Production

GitHub

GitHub is a platform for hosting Git repositories. It helps the team manage changing code through version control and code review.

CodePen

CodePen is an online editor for HTML, CSS, and JS. It works as a collaborative tool alongside GitHub, helping the different team members, including full-stack developers, to work together.

Gutenberg

Clients want to be able to make future changes to the site or create simple page builds that follow the best web development practices.

Page builders make creating beautiful websites simple without the need for coding knowledge or experience. Gutenberg, the WordPress block editor, is one option that clients can use to edit their pages.

Testing and Feedback

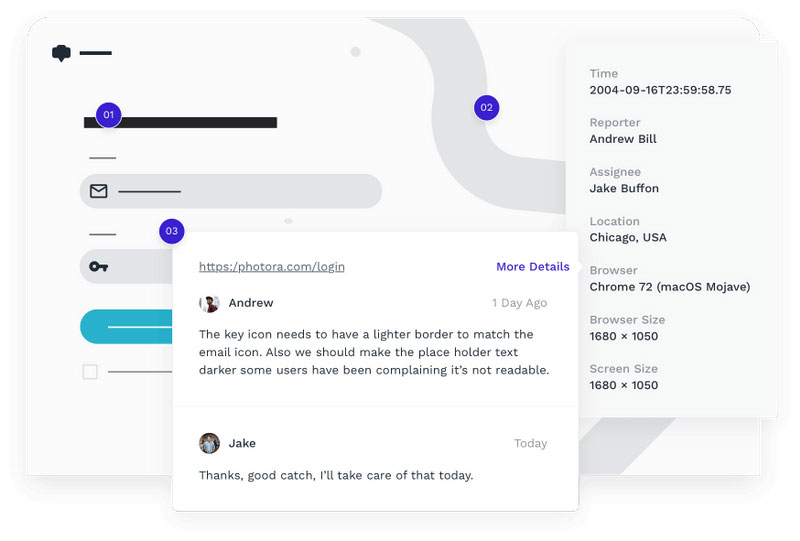

Usersnap

Usersnap is a tool for tracking bugs and fixes.

It is useful for gathering feedback, reporting problems, and handling change requests.

It makes interaction with clients, team members, and site visitors easy.

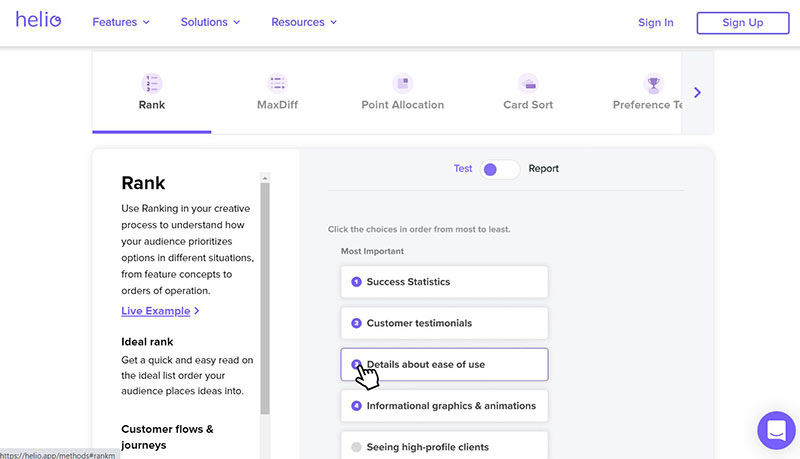

Helio

Helio is a survey platform for asking questions about design, product, and marketing, which makes it useful for user experience (UX) research.

It provides quantitative and qualitative answers, gathering data from panelists, audiences, and team members.

This helps the team settle on a strong design early in the project.

Cage App

Cage is a tool for online proofing and review.

It makes it a breeze to collaborate and get approval from clients.

FAQs about web development team workflow

How do you structure a web development team for the best efficiency?

Well, typically, a balanced team combines front-end and back-end developers, a UX/UI designer, and sometimes a database engineer.

It’s crucial to understand everyone’s roles and make sure there’s clear communication, especially when Scrum methodology is in play. Think of it as assembling a superhero team where everyone has their unique power, but when they come together, magic happens!

What tools do web dev teams commonly use for project management?

Ah, great question! In my experience, tools like Trello, Basecamp, and Asana are pretty popular. These platforms offer visual boards, helping the team keep track of web development practices and tasks. It’s all about keeping things streamlined and ensuring the web application architecture is always on point.

How do teams handle version control?

Version control is the backbone! Most teams use Git, hosted on platforms like GitHub or Bitbucket. These tools allow developers to track changes, collaborate without stepping on each other’s toes, and merge changes into the main project. It’s like having a time machine for your code, which is super handy if something goes haywire!

What’s the role of a UX/UI designer in the team?

Oh, they’re like the chefs of the web world! UX/UI designers focus on user experience and the overall look and feel of a site.

They make sure the site isn’t just functional but also aesthetically pleasing. With web accessibility in mind, they ensure that everyone, regardless of ability, can navigate the site smoothly.

How often should teams meet and discuss the project?

Regular check-ins are a must! Whether it’s daily stand-ups (a classic in Scrum methodology) or weekly round-ups, it keeps everyone on the same page. It’s all about making sure the pieces fit together and potential roadblocks are discussed before they become massive issues.

What’s the best way to test and review code in a team setting?

Peer reviews, my friend! Using tools like GitHub, team members review each other’s code to catch potential issues.

Alongside peer review, cross-browser testing helps ensure the site works everywhere. The feedback process becomes a dynamic dance of web development practices and constructive criticism.

How do you deal with disagreements within the team?

Ah, the age-old issue! Open communication is key. Encourage team members to voice concerns and use disagreements as an opportunity to grow and innovate.

When your frameworks and libraries clash (metaphorically speaking), remember the common goal: to create an awesome product.

What role does client feedback play in the team’s workflow?

It’s monumental! After all, we’re building for them. Regular feedback ensures the project aligns with client expectations.

Plus, this two-way dialogue can shed light on aspects the team might’ve missed, fine-tuning the web application architecture to perfection.

How do teams ensure websites are accessible to all users?

Web accessibility is HUGE! It’s not just about doing what’s right; it’s also about reaching a wider audience.

Teams usually follow established guidelines like WCAG and use tools to test sites. Remember, a site that everyone can use is always more successful.

How do you know when a project is ready to launch?

The million-dollar question! After rigorous testing, client approval, and ensuring load testing and web application integration are spot-on, it’s go-time.

But always be ready for post-launch tweaks, because, as we know, the digital world is ever-evolving.

How does the team approach user experience and user interface design during the development process?

The team uses a user-centered design process, which entails gathering user feedback, designing personas, and developing wireframes and prototypes.

To make sure the website is user-friendly and fits the needs of the target audience, the team also conducts usability testing and iterates on the design. To keep the user experience consistent and useful across the entire website, the team may additionally work with a UX designer or expert.

To ensure that the website is accessible to visitors with disabilities, the team may also take into account accessibility best practices, such as offering alternative text for images and making sure that the website can be navigated using a keyboard. Using web accessibility testing tools can help you a lot here.
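As a small illustration of what such a check can look like, the sketch below uses Python's standard `html.parser` module to list images that have no `alt` attribute. It is a minimal example, not a substitute for a full accessibility audit; the class and function names and the sample markup are made up for this example.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collect the src of every <img> that has no alt attribute at all."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        # alt="" is valid for decorative images, so only a missing
        # attribute is reported here.
        if "alt" not in attributes:
            self.missing.append(attributes.get("src", "(no src)"))


def images_missing_alt(html: str) -> list[str]:
    """Return the src values of images that lack an alt attribute."""
    finder = MissingAltFinder()
    finder.feed(html)
    return finder.missing


page = """
<img src="logo.png" alt="Company logo">
<img src="divider.png" alt="">
<img src="team.jpg">
"""
print(images_missing_alt(page))
```

A check like this only finds missing attributes; whether the alternative text is actually meaningful still needs a human review.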

Ending thoughts on the web development team workflow

Web development projects require the collaboration of many individuals. These people come from different disciplines and engage in a wide range of activities related to web application architecture.

Getting to the forefront requires combining the right tools with the right set of techniques. Having a motivated team of people with the necessary skills and experience in web development practices is the first step. Guiding this team with the best Scrum methodology and web development workflow is what will make it work.

Every team member is necessary for developing the product and contributes to refining the workflow. The success and efficacy of the workflow depend on the experience and cooperation of the team members.

Some tools to achieve this are retrospectives and code review. At the site level, holding meetings with multiple teams can be useful.

The resulting constructive feedback can be used to analyze the overall workflow. Having a stable team and applying these techniques makes steady improvement from project to project much more likely.

Continuous feedback and communication are essential elements in the delivery of the final product. There is a mountain of information and opinions within the field of web development. With the growing complexity of websites and applications, and the demands for load testing, the help and feedback available by using online tools, tailored for user experience, will be more than welcome.

