Picture this: you’ve got mountains of data piling up, waiting to be sifted through for those golden insights. That’s where Hadoop usually jumps in, right? But what if I told you there’s more than one sherpa ready to guide you through this data Everest? Hadoop alternatives are lining up, and they’ve got their climbing gear on point.

In this world where data rules, sticking to one path can mean missing out on the scenic routes. We’re diving into the wide array of options beyond Hadoop. Here, scalability meets agility, and big data processing gets a makeover.

By the time you’re done here, you’ll have the lowdown on platforms that are reshaping the big data landscape. You’ll learn about Apache Spark, which brings the heat with in-memory processing, and how cloud-based data solutions can skyrocket your data analytics game.

Get set to explore the cool kids on the data block, from real-time analytics engines to distributed database management systems that turn your big data into big action.

Join me on this trailblazing adventure. And hey, don’t sweat the tech lingo; I’ve got you covered with byte-sized insights on each twist and turn!

Hadoop alternatives

| Hadoop Alternative | Primary Use Case | Architecture / Data Model | Deployment | Special Features |
|---|---|---|---|---|
| Apache Spark | General data processing | In-memory computing | Standalone, on YARN, Mesos | Machine learning, SQL, streaming |
| Apache Flink | Stream processing | Streaming-first engine | Standalone, on YARN, Kubernetes | Real-time processing, fault tolerance |
| Databricks | Unified analytics platform | Collaborative workspace | Cloud-based | Machine learning, real-time analytics, optimized for cloud |
| Google Cloud Dataflow | Stream and batch processing | Fully managed service | Cloud-based (GCP) | Auto-scaling, integration with Google Cloud |
| Amazon EMR | Managed big data frameworks | Hadoop ecosystem | Cloud-based (AWS) | Cost-effective, scalable, flexible data processing |
| Presto | Interactive data queries | SQL query engine | Any environment with Java | High-speed queries, works with various data sources |
| Snowflake | Data warehousing | Cloud data platform | Cloud-based | Data sharing, strong security protocols |
| Vertica | High-speed analytics | Columnar storage | On-premises, cloud | Machine learning at scale, high-performance database |
| ClickHouse | OLAP queries | Column-oriented DBMS | Standalone, cloud | Real-time and historical data analysis, linear scalability |
| Cassandra | Large-scale applications | Decentralized, wide-column store | Standalone, cloud | Fault tolerance, linear scalability, decentralized |
| Couchbase | Interactive applications | Document and key-value store | On-premises, cloud | Flexible data model, full-text search, real-time analytics |
| Riak KV | Key-value data storage | Key-value store | On-premises, cloud | High availability, easy scalability |
| Druid | Analytics on event-driven data | Column-oriented | On-premises, cloud | Real-time ingestion, fast OLAP queries, horizontal scaling |
| TimescaleDB | Time-series data | SQL-based time-series | On-premises, cloud | Time-series optimization, scalable, continuous aggregates |
| InfluxDB | Time-series data | Time-series database | Standalone, InfluxDB Cloud | High write throughput, visualization and monitoring |
| Greenplum | Analytics and BI | MPP data warehouse | On-premises, cloud | Analytics at petabyte scale, open source, based on PostgreSQL |
| Hazelcast | In-memory computing | In-memory data grid | On-premises, cloud | Distributed caching, streaming, and computing |

Apache Spark

Apache Spark lights up big data processing by keeping working data in memory, offering a one-stop engine that crunches numbers at lightning speed. It’s built for those who can’t afford to wait, making it a stellar choice for analytics, machine learning, and a ton more.

Best Features

- Lightning-fast data processing

- Supports SQL, streaming, and complex analytics

- Robust machine learning library

What we like about it: Apache Spark’s in-memory computing is a game-changer. By keeping intermediate results in memory instead of writing them to disk between steps, it processes data so fast that you’re done analyzing before the coffee’s even brewed. Perfect for when time isn’t just money, it’s everything.
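To make the in-memory idea concrete, here is a toy, Spark-inspired sketch in plain Python. It is not Spark’s API: `ToyDataset`, `read_source`, and the `reads` counter are hypothetical names made up for illustration. Transformations are lazy, and `cache()` keeps the materialized rows in memory so a second action reuses them instead of going back to the source, which is roughly what `cache()`/`persist()` buy you in Spark.

```python
from typing import Any, Callable, List, Optional


class ToyDataset:
    """Hypothetical Spark-inspired dataset: lazy transformations plus opt-in caching."""

    def __init__(self, compute: Callable[[], List[Any]]) -> None:
        self._compute = compute              # how to (re)build this dataset's rows
        self._cached: Optional[List[Any]] = None
        self._use_cache = False

    def map(self, fn: Callable[[Any], Any]) -> "ToyDataset":
        # Lazy: only records the work; nothing runs until an action is called.
        return ToyDataset(lambda: [fn(x) for x in self._rows()])

    def filter(self, pred: Callable[[Any], bool]) -> "ToyDataset":
        return ToyDataset(lambda: [x for x in self._rows() if pred(x)])

    def cache(self) -> "ToyDataset":
        # Mark this dataset so its rows stay in memory after the first computation.
        self._use_cache = True
        return self

    def _rows(self) -> List[Any]:
        if not self._use_cache:
            return self._compute()           # recompute from the source every time
        if self._cached is None:
            self._cached = self._compute()   # first action materializes and keeps the rows
        return self._cached

    # Actions trigger computation.
    def count(self) -> int:
        return len(self._rows())

    def total(self) -> Any:
        return sum(self._rows())


reads = {"count": 0}


def read_source() -> List[int]:
    """Stands in for an expensive read from disk or a remote store."""
    reads["count"] += 1
    return list(range(1, 11))


evens = ToyDataset(read_source).filter(lambda x: x % 2 == 0).cache()
print(evens.count(), evens.total())  # 5 30
print(reads["count"])                # 1: the second action reused the cached rows
```

Drop the `.cache()` call and each action goes back to `read_source`, which is the repeated disk round trip that classic MapReduce jobs pay between stages.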

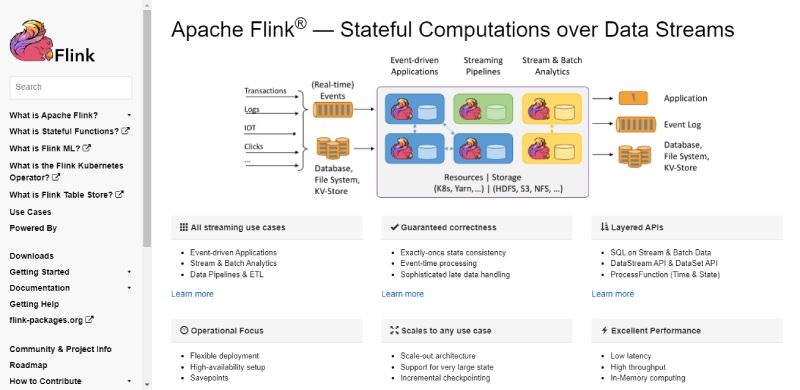

Apache Flink

Flink dances on the line between data processing and outright sorcery. Real-time stream processing? Batch processing? This platform does both with a flourish, and its checkpointing keeps state consistent (exactly-once guarantees) no matter the hustle.

Best Features

- Exceptional stream processing capabilities

- Fault-tolerant and highly scalable

- Detailed monitoring and logging

What we like about it: The real-time data processing prowess of Flink is nothing short of magical. It’s like having a crystal ball that not only predicts the future but also understands the past, in real time.
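A staple of stream processing is the tumbling window: fixed, non-overlapping time buckets whose results are emitted as the stream moves past them. The sketch below is plain Python, not Flink’s API, and `tumbling_window_counts` is a hypothetical name. It assumes events arrive in timestamp order; real Flink jobs handle out-of-order events with watermarks and keep window state fault-tolerant through checkpoints, neither of which is modeled here.

```python
from typing import Dict, Iterable, Iterator, Optional, Tuple


def tumbling_window_counts(
    events: Iterable[Tuple[int, str]], window_ms: int
) -> Iterator[Tuple[int, Dict[str, int]]]:
    """Count events per key in fixed, non-overlapping windows.

    Assumes events arrive in timestamp order; a window's result is emitted as
    soon as the first event of a later window shows up.
    """
    current_start: Optional[int] = None
    counts: Dict[str, int] = {}
    for ts, key in events:
        start = ts - ts % window_ms              # window this event belongs to
        if current_start is not None and start != current_start:
            yield current_start, counts          # previous window is complete: emit it
            counts = {}
        current_start = start
        counts[key] = counts.get(key, 0) + 1
    if current_start is not None:
        yield current_start, counts              # flush the last window when the stream ends


clicks = [(1000, "click"), (1500, "click"), (2100, "view"), (2500, "click"), (3999, "click")]
for window_start, per_key in tumbling_window_counts(clicks, window_ms=1000):
    print(window_start, per_key)
# 1000 {'click': 2}
# 2000 {'view': 1, 'click': 1}
# 3000 {'click': 1}
```

Because it is a generator, results flow out while input is still arriving, which is the key difference from computing the same counts in one batch at the end.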

Databricks

Databricks is where the data wizards come to play. They’ve laid out the red carpet for big data analytics and made friends with AI to offer a truly unified data platform. Collaborative, cloud-savvy, and studded with analytics tools, it’s a beacon of productivity.

Best Features

- Collaborative workspace

- AI and machine learning integration

- Optimized for cloud platforms

What we like about it: Their collaborative environment is the cherry on top. You’ve got everyone from data scientists to business analysts brainstorming in one space, making it a data democracy where insights reign supreme.

Google Cloud Dataflow

Google Cloud Dataflow strides in with a cape, ready to battle complexities in data processing. It runs Apache Beam pipelines and handles provisioning, scaling, and worker management for you, setting the bar high for stream and batch data processing in the cloud.

Best Features

- Fully managed service

- Auto-scaling and performance optimization

- Seamless integration with Google Cloud services

What we like about it: The auto-scaling feature is a crowd-pleaser, waving goodbye to manual tweaking. Resources flex up or down as your data does the tango, trimming costs and keeping performance in tiptop shape.
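Here is a back-of-the-envelope version of the idea behind backlog-driven autoscaling. This is a hypothetical rule written for illustration, not Dataflow’s actual algorithm (the real autoscaler is more sophisticated and its internals aren’t reproduced here); `desired_workers` and its parameters are made-up names.

```python
import math


def desired_workers(
    backlog_events: int,
    events_per_worker_per_sec: float,
    target_drain_sec: float,
    min_workers: int = 1,
    max_workers: int = 100,
) -> int:
    """Hypothetical backlog-based scaling rule (not Dataflow's actual algorithm).

    Pick enough workers to clear the current backlog within target_drain_sec,
    then clamp to the allowed range so the job never scales to zero or runs away.
    """
    capacity_per_worker = events_per_worker_per_sec * target_drain_sec
    needed = math.ceil(backlog_events / capacity_per_worker)
    return max(min_workers, min(max_workers, needed))


print(desired_workers(120_000, 1_000, 60))      # 2: two workers clear the backlog in a minute
print(desired_workers(0, 1_000, 60))            # 1: quiet period, scale down to the floor
print(desired_workers(10_000_000, 1_000, 60))   # 100: capped at max_workers
```

The clamp is the part that keeps costs predictable: scaling up follows the data, but only within limits you set.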

Amazon EMR

Strap in for a ride with Amazon EMR, a cloud-native sheriff that wrangles big data without breaking a sweat. It plays nice with the entire Hadoop ecosystem and adds more ammo with tools such as Apache Spark, Presto, and Apache Flink.

Best Features

- Easy integration with Hadoop ecosystem

- Cost-effective with pay-as-you-go pricing

- Versatile data processing capabilities

What we like about it: Its cost-effectiveness steals the show. Pay for what you need, scale without fretting over expenses, and optimize that budget like a financial wizard.

Presto

Presto is that cool kid that makes big data queries seem like child’s play. Engineered for interactive analytics, it’s all about dishing out answers faster than you can say “query”.

Best Features

- Super-fast query performance

- Works with a variety of data sources

- Easy to scale and maintain

What we like about it: The sheer speed at which Presto blitzes through queries is jaw-dropping. It can reach across various data sources, such as Hive tables, relational databases, and object storage, in a single query, making it a superhero in the world of quick-fire data analytics.
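Presto addresses tables as `catalog.schema.table`, with each catalog backed by a connector, so one SQL statement can join data that lives in different systems. Python’s standard-library `sqlite3` is used below purely as a stand-in: `ATTACH DATABASE` gives us a second, separate database, and a single query joins across both by qualified name. The `orders` and `customers` tables are hypothetical, and SQLite is not a distributed engine.

```python
import sqlite3

conn = sqlite3.connect(":memory:")                 # plays the role of one data source
conn.execute("ATTACH DATABASE ':memory:' AS crm")  # a second, separate database

conn.execute("CREATE TABLE main.orders (customer_id INTEGER, amount REAL)")
conn.execute("CREATE TABLE crm.customers (id INTEGER, name TEXT)")
conn.executemany("INSERT INTO main.orders VALUES (?, ?)", [(1, 20.0), (1, 5.0), (2, 7.5)])
conn.executemany("INSERT INTO crm.customers VALUES (?, ?)", [(1, "Ada"), (2, "Linus")])

# One query, two sources: tables are referenced by qualified names, much like
# Presto's catalog.schema.table.
rows = conn.execute(
    """
    SELECT c.name, SUM(o.amount) AS total
    FROM main.orders AS o
    JOIN crm.customers AS c ON c.id = o.customer_id
    GROUP BY c.name
    ORDER BY c.name
    """
).fetchall()
print(rows)  # [('Ada', 25.0), ('Linus', 7.5)]
```

In Presto the `main` and `crm` qualifiers would instead be catalogs pointing at, say, a Hive metastore and a PostgreSQL server, and the engine would push work out to its workers rather than run everything in one process.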

Snowflake

Snowflake doesn’t just store your data; it turns it into a powerhouse of insights with an architecture that separates storage from compute. Seamless data sharing and robust security protocols mean your data isn’t just smart; it’s also safe. You can also replicate data from sources such as a PostgreSQL database into Snowflake, typically through a connector or data pipeline tool, for near-real-time data integration. You’ve gotta check out Snowflake.

Best Features

- Data sharing capabilities

- Strong security and compliance

- No hardware or software to manage

What we like about it: The data sharing feature stands out, making silos a thing of the past. Collaboration soars and walls come down, all while your data remains snug and secure under Snowflake’s watchful eye.

Vertica

Vertica dives deep into data analytics, offering stone-cold reliable storage and speedy queries. A touch of SQL and a dash of machine learning make this a top-shelf choice for extracting insights.

Best Features

- Rapid query execution

- High-performance analytics database

- Machine learning at scale

What we like about it: Vertica’s knack for rapid-fire query execution has won hearts. Information is power, and this platform delivers that power at the snap of your fingers, transforming data into actionable insight pronto.

ClickHouse

Feast your eyes on ClickHouse, where OLAP (Online Analytical Processing) gets a turbo boost. Perfectly suited for real-time query processing, this platform can handle the hustle and bustle of heavy loads without flinching.

Best Features

- Real-time query processing

- Column-oriented database management

- Linear scalability

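What we like about it: Its columnar storage turns heads. Because each column is stored separately, a query reads only the columns it needs, and similar values sitting side by side compress well, so your data feels like it’s gliding on ice. Crunch numbers galore and watch ClickHouse serve up answers in a flash.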
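Here is the columnar idea in miniature, in plain Python. The data is hypothetical, and run-length encoding is just one simple scheme chosen to show why sorted columns compress well; ClickHouse ships its own compression codecs and storage format, which this does not reproduce.

```python
from typing import Any, Dict, List, Tuple

# Row-oriented layout: each record is stored together.
rows: List[Dict[str, Any]] = [
    {"user": "u1", "country": "DE", "amount": 10},
    {"user": "u2", "country": "DE", "amount": 25},
    {"user": "u3", "country": "FR", "amount": 5},
    {"user": "u4", "country": "FR", "amount": 40},
    {"user": "u5", "country": "FR", "amount": 20},
]


def to_columns(records: List[Dict[str, Any]]) -> Dict[str, List[Any]]:
    """Pivot records into a column-oriented layout: one list per column."""
    return {name: [r[name] for r in records] for name in records[0]}


def run_length_encode(values: List[Any]) -> List[Tuple[Any, int]]:
    """Collapse runs of repeated values into (value, run_length) pairs."""
    runs: List[List[Any]] = []
    for v in values:
        if runs and runs[-1][0] == v:
            runs[-1][1] += 1
        else:
            runs.append([v, 1])
    return [(v, n) for v, n in runs]


columns = to_columns(rows)
# An aggregate like SUM(amount) only has to touch the "amount" column.
print(sum(columns["amount"]))                 # 100
# A sorted, low-cardinality column compresses into a handful of runs.
print(run_length_encode(columns["country"]))  # [('DE', 2), ('FR', 3)]
```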

Cassandra

Cassandra struts into the room, flexing its ability to manage enormous amounts of data with the ease of a seasoned pro. It’s robust, distributed, and can take a failure on the chin without breaking a sweat.

Best Features

- Exceptional fault tolerance

- Linear scalability

- Decentralized system

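What we like about it: Cassandra’s fault tolerance is the talk of the town. Every row is replicated to several nodes and there’s no single master, so losing a node doesn’t take your data offline. Want to keep your data safe and always accessible, despite hiccups? Cassandra’s your guardian, proving that in the digital world, resilience is king.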
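Under the hood, Cassandra places data on a token ring: a key is hashed to a token, and with the simple replication strategy the replicas go to the next nodes clockwise. The sketch below is simplified and hypothetical (`TokenRing`, `token`): it gives each node a single token (no virtual nodes) and uses MD5 from the standard library, whereas Cassandra’s default partitioner uses Murmur3.

```python
import bisect
import hashlib
from typing import List, Tuple


def token(key: str) -> int:
    """Map a key to a position on the ring.

    Uses MD5 from the standard library as a stand-in; Cassandra's default
    partitioner uses Murmur3 instead.
    """
    return int.from_bytes(hashlib.md5(key.encode()).digest()[:8], "big")


class TokenRing:
    """Simplified token ring: one token per node, replicas placed clockwise."""

    def __init__(self, nodes: List[str]) -> None:
        self._ring: List[Tuple[int, str]] = sorted((token(n), n) for n in nodes)
        self._tokens = [t for t, _ in self._ring]

    def replicas(self, key: str, replication_factor: int) -> List[str]:
        # First node whose token is >= the key's token, wrapping past the end...
        start = bisect.bisect_left(self._tokens, token(key)) % len(self._ring)
        # ...then the next replication_factor distinct nodes clockwise.
        n = min(replication_factor, len(self._ring))
        return [self._ring[(start + i) % len(self._ring)][1] for i in range(n)]


ring = TokenRing(["node-a", "node-b", "node-c", "node-d"])
owners = ring.replicas("user:42", replication_factor=3)
print(owners)  # three distinct nodes; if one fails, two copies remain
```

Because any of the listed replicas can serve the key, a client can keep reading and writing while one of them is down, which is where the fault tolerance comes from.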

Couchbase

With a wink and a smile, Couchbase offers a dynamic approach to data. Imagine a hybrid creature that’s both a document database and a key-value store. Pretty neat, right? This combo means versatility and performance get a big thumbs up.

Best Features

- Flexible data models

- Full-text search and real-time analytics

- Easy scalability

What we like about it: Flexibility in data modeling steals the spotlight. Couchbase molds itself to fit your data, rather than the other way around. It’s like having a database tailored to your business’s unique contours.

Riak KV

Riak KV is that quiet achiever in the corner, a distributed key-value database that’s all about reliability and simplicity. It’s designed with redundancy and fault tolerance at its core, making it a reliable data sidekick in a volatile world.

Best Features

- High fault tolerance

- Easy to operate and scale

- Convergent replication techniques

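What we like about it: The convergent replication Riak KV uses is smooth. When replicas drift apart during a node failure or network partition, Riak is built to bring them back into agreement (its CRDT-based data types even merge concurrent updates automatically), so there’s less worrying about conflicts or data getting lost in translation. Peace of mind? Check!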
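Riak KV’s built-in data types (counters, sets, maps, and friends) are based on CRDTs, conflict-free replicated data types. The simplest CRDT, a grow-only counter, shows why replicas converge. This is a generic textbook sketch in plain Python, not Riak’s client API or its exact counter implementation, and `GCounter` is a hypothetical name.

```python
from typing import Dict


class GCounter:
    """Grow-only counter CRDT.

    Each replica only increments its own slot. Merging takes the per-replica
    maximum, which is commutative, associative, and idempotent, so replicas
    converge to the same value no matter how often or in what order they sync.
    """

    def __init__(self, replica_id: str) -> None:
        self.replica_id = replica_id
        self.slots: Dict[str, int] = {}

    def increment(self, amount: int = 1) -> None:
        if amount < 0:
            raise ValueError("a grow-only counter cannot decrease")
        self.slots[self.replica_id] = self.slots.get(self.replica_id, 0) + amount

    def merge(self, other: "GCounter") -> None:
        for rid, count in other.slots.items():
            self.slots[rid] = max(self.slots.get(rid, 0), count)

    def value(self) -> int:
        return sum(self.slots.values())


# Two replicas accept writes independently, e.g. during a network partition.
a, b = GCounter("a"), GCounter("b")
a.increment(3)
b.increment(2)

# After they exchange state, both agree on the total; repeating a merge changes nothing.
a.merge(b)
b.merge(a)
a.merge(b)
print(a.value(), b.value())  # 5 5
```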

Druid

Druid swoops in like a mythical creature, built for lightning-fast queries on large, complex datasets. Pair that with real-time data ingestion, and you have a platform that’s all about delivering insights at the speed of thought.

Best Features

- Real-time data ingestion

- Quick slice-and-dice analytics

- Horizontal scaling

What we like about it: Speedy queries are Druid’s claim to fame. It gives you the answers you need, practically as you’re asking the questions. For data-driven decisions at a moment’s notice, Druid’s your man… err, mythical data beast.
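Part of how Druid keeps slice-and-dice queries fast is that it can optionally roll up data at ingestion: timestamps are truncated to a chosen granularity and rows with identical dimension values are pre-aggregated. The plain-Python sketch below illustrates that idea with hypothetical fields (`page`, `country`, `bytes`); it is not Druid’s ingestion spec or query API.

```python
from collections import defaultdict
from typing import Any, Dict, List, Tuple

RollupKey = Tuple[int, str, str]  # (time bucket, page, country)


def rollup(events: List[Dict[str, Any]], granularity_s: int) -> Dict[RollupKey, Dict[str, int]]:
    """Pre-aggregate raw events at ingestion time.

    Timestamps are truncated to the granularity, and events that share a time
    bucket and the same dimension values collapse into one row of metrics.
    """
    table: Dict[RollupKey, Dict[str, int]] = defaultdict(lambda: {"count": 0, "bytes": 0})
    for e in events:
        bucket = e["ts"] - e["ts"] % granularity_s
        row = table[(bucket, e["page"], e["country"])]
        row["count"] += 1
        row["bytes"] += e["bytes"]
    return dict(table)


raw = [
    {"ts": 5, "page": "home", "country": "DE", "bytes": 100},
    {"ts": 40, "page": "home", "country": "DE", "bytes": 50},
    {"ts": 70, "page": "home", "country": "DE", "bytes": 25},
    {"ts": 20, "page": "docs", "country": "FR", "bytes": 10},
]
rolled = rollup(raw, granularity_s=60)
print(len(raw), "->", len(rolled))  # 4 -> 3

# "Slice and dice": filter on a dimension, then aggregate the pre-rolled rows.
de_bytes = sum(m["bytes"] for (_, _, country), m in rolled.items() if country == "DE")
print(de_bytes)  # 175
```

The trade-off is that you can no longer see individual raw events inside a bucket, in exchange for scanning far fewer rows at query time.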

TimescaleDB

TimescaleDB – the rebel in the database world – merges the reliability of SQL with the scalability of NoSQL. It’s the go-to for working with time-series data, and honestly, it makes time-series queries look like a walk in the park. Plus, you get all the goodness of PostgreSQL, like ACID compliance and SQL IDE support.

Best Features

- Optimized for time-series data

- Combines SQL familiarity with NoSQL scalability

- Parallel query processing

What we like about it: Its mastery of time-series data is unmatched. TimescaleDB weaves through timelines with the precision of a historian and the clarity of a clairvoyant, making it a time-series data powerhouse.

InfluxDB

InfluxDB is that must-have gadget built specifically for time-series data. It writes and crunches time-stamped data as if it’s got a direct line to Father Time himself.

Best Features

- Dedicated time-series database

- Built-in data visualization and monitoring

- High write throughput

What we like about it: InfluxDB’s high write throughput is like watching a data sprinter win gold. There’s no lag, just smooth, efficient, and super-fast data recording that keeps up with the ticks of time.

Greenplum

Greenplum strolls in and sets up shop, promising a database that’s big on analytics. Built on PostgreSQL with a massively parallel processing (MPP) architecture, it’s the big brain that solves equally big questions, making petabyte-scale data analytics feel less like a behemoth of a task and more like a regular day at the office.

Best Features

- Petabyte-scale data warehousing

- Advanced analytics

- Open-source and based on PostgreSQL

What we like about it: Its petabyte-scale data warehousing is a nerd’s dream. With Greenplum, handling massive datasets isn’t just possible; it’s a performance worth a standing ovation.
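The MPP trick behind that scale can be sketched in a few lines: rows are spread across segments by hashing a distribution key, each segment aggregates only its own slice, and a coordinator merges the partial results. This plain-Python version is a simplified illustration with hypothetical names and data; the segments run sequentially here, whereas in Greenplum they work in parallel on separate hosts.

```python
import zlib
from collections import defaultdict
from typing import Any, Dict, List, Tuple

Row = Dict[str, Any]
Partial = Dict[str, Tuple[float, int]]


def distribute(rows: List[Row], dist_key: str, n_segments: int) -> List[List[Row]]:
    """Hash each row's distribution key to pick the segment that stores it."""
    segments: List[List[Row]] = [[] for _ in range(n_segments)]
    for row in rows:
        seg = zlib.crc32(str(row[dist_key]).encode()) % n_segments
        segments[seg].append(row)
    return segments


def segment_partial_avg(rows: List[Row]) -> Partial:
    """Runs on each segment: partial (sum, count) per region over local rows only."""
    sums: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for r in rows:
        sums[r["region"]] += r["amount"]
        counts[r["region"]] += 1
    return {g: (sums[g], counts[g]) for g in sums}


def coordinator_avg(partials: List[Partial]) -> Dict[str, float]:
    """Runs on the coordinator: merge partial sums and counts, then divide once."""
    sums: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for partial in partials:
        for g, (s, c) in partial.items():
            sums[g] += s
            counts[g] += c
    return {g: sums[g] / counts[g] for g in sums}


orders = [
    {"order_id": 1, "region": "eu", "amount": 10},
    {"order_id": 2, "region": "eu", "amount": 30},
    {"order_id": 3, "region": "us", "amount": 5},
    {"order_id": 4, "region": "us", "amount": 15},
    {"order_id": 5, "region": "eu", "amount": 20},
]
segments = distribute(orders, dist_key="order_id", n_segments=3)
partials = [segment_partial_avg(seg) for seg in segments]  # parallel in a real MPP system
print(sorted(coordinator_avg(partials).items()))  # [('eu', 20.0), ('us', 10.0)]
```

Segments ship (sum, count) pairs rather than averages because averaging averages gives the wrong answer when segments hold different numbers of rows.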

Hazelcast

Hazelcast is all about going fast and staying flexible. It’s an in-memory computing platform that ditches the disk for a life in the fast lane. With this, computing gets a nitrous oxide boost, and data processing times drop like they’re hot.

Best Features

- In-memory data grid

- Stream processing capabilities

- Embedded distributed computing

What we like about it: The in-memory data grid is Hazelcast’s secret sauce. It keeps things zippy and smooth, serving up data transactions and analytics at breakneck speeds. Ready, set, fast!
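An in-memory data grid like Hazelcast splits data into partitions (271 by default, as we understand Hazelcast’s configuration), spreads them across cluster members, and keeps backup copies on other members. The toy below mimics that shape in plain Python. `ToyDataGrid` and its round-robin partition assignment are hypothetical simplifications, not Hazelcast’s API or its actual partitioning algorithm.

```python
import zlib
from typing import Any, Dict, Iterable, List, Optional


class ToyDataGrid:
    """Hypothetical partitioned in-memory map with one backup copy per entry."""

    def __init__(self, members: List[str], partition_count: int = 271) -> None:
        self.partition_count = partition_count
        # Round-robin ownership; each partition's backup lives on the next member.
        self.owner = {p: members[p % len(members)] for p in range(partition_count)}
        self.backup = {p: members[(p + 1) % len(members)] for p in range(partition_count)}
        self.memory: Dict[str, Dict[str, Any]] = {m: {} for m in members}

    def partition_of(self, key: str) -> int:
        return zlib.crc32(key.encode()) % self.partition_count

    def put(self, key: str, value: Any) -> None:
        p = self.partition_of(key)
        self.memory[self.owner[p]][key] = value    # primary copy, held in RAM
        self.memory[self.backup[p]][key] = value   # backup copy on another member

    def get(self, key: str, failed: Iterable[str] = ()) -> Optional[Any]:
        down = set(failed)
        p = self.partition_of(key)
        for member in (self.owner[p], self.backup[p]):
            if member not in down and key in self.memory[member]:
                return self.memory[member][key]
        return None


grid = ToyDataGrid(["member-1", "member-2", "member-3"])
grid.put("session:7", {"user": "ada"})
primary = grid.owner[grid.partition_of("session:7")]
print(grid.get("session:7"))                    # {'user': 'ada'}
print(grid.get("session:7", failed=[primary]))  # {'user': 'ada'}, served from the backup
```

Since every lookup is a hash plus a dictionary read in RAM, there is no disk seek on the hot path, and the backup copy is what lets the grid survive a member dropping out.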

FAQ on Hadoop alternatives

What exactly are Hadoop alternatives?

Think of them like different flavors of your favorite ice cream. They’re other data processing tools that handle massive data sets. We’re talking Apache Spark, Google Cloud Dataproc, or even some sleek cloud-based data solutions. They offer unique features and can sometimes run circles around Hadoop when it comes to speed or ease of use.

Why would someone look for an alternative to Hadoop?

Picture Hadoop as a sturdy old truck — reliable but a bit clunky. Sometimes, you need a sports car, or a motorbike, or maybe even a jet. That’s where Hadoop alternatives come in. They can be faster, easier to manage, more cost-effective, or just a better fit for certain big data processing tasks.

Can Hadoop alternatives handle big data as effectively?

Absolutely. In fact, some are born for the spotlight when handling big data. Look at Apache Spark: it’s built for lightning-fast analytics, including near-real-time streaming. Then there’s Apache Flink, flexing its muscles in stream processing, and Elasticsearch, doing the same for search. So yes, effectiveness is their middle name.

What are some popular Hadoop alternatives?

On the popular crew, we’ve got Apache Spark, stealing the show in speed and simplicity. Then there are Cloudera and MapR (now part of HPE), which package Hadoop and friends into tailored ecosystems. Don’t forget Microsoft Azure HDInsight; it’s a heavy hitter for those wedded to Microsoft services.

Is it difficult to transition from Hadoop to its alternatives?

Transitioning has its moments, you know? It’s like switching from manual to automatic. If your team has a solid grip on big data concepts and isn’t afraid of a little learning curve, they’ll adapt. Some systems even smooth the transition by fitting into familiar environments; Apache Spark, for example, can run on an existing YARN cluster and read data straight from HDFS.

How do the costs of Hadoop alternatives compare?

So, diving into costs is like unearthing buried treasure – it varies. Some alternatives might be pricier upfront but offer savings in maintenance or scaling down the line. Cloud-based data solutions, for example, offer pay-as-you-go models. It’s different strokes for different folks, with costs swinging based on your specific needs.

Can Hadoop alternatives integrate with existing data ecosystems?

For sure. Most of these alternatives are not just ready to mingle; they’re the life of the data party. They can slide into existing data analytics platforms and dance well with NoSQL databases and whatnot. Apache Spark and others offer connectors and APIs for smooth integration.

What about support and community for Hadoop alternatives?

Oh, the support vibes? They’re strong. Open-source projects from the Apache Software Foundation, along with vendors like Cloudera, boast big communities, with loads of brains ready to tackle issues. Plus, many options throw in dedicated support if you cozy up to a paid package. So yeah, you’re pretty much backed up.

Are there any industry-specific Hadoop alternatives?

You bet. There are specialized tools that fit industries like a glove. Financial firms might lean on Apache Flink to build real-time fraud detection pipelines. Healthcare has a thing for alternatives offering tight security for sensitive data. Industry-specific big data needs? There’s likely an alternative that caters to them.

Do Hadoop alternatives offer better performance?

That’s like asking if a sports car goes faster than a minivan — usually, yeah. Alternatives like Apache Spark have a rep for top-notch performance, especially with in-memory processing. But remember, ‘better’ is in the eye of the beholder, dependent on the type of data work you’re pulling off.

Ending thoughts

So, we’ve reached the end of our digital hike through the landscape of Hadoop alternatives. It’s clear, right? There’s a whole world out there buzzing with potential.

Hadoop’s been a trusty sidekick in the big data journey, doing the heavy lifting with the grace of an elephant. But the new kids on the block? They bring fresh tricks to the table.

They range from Apache Spark’s electric speed to cloud-based data solutions tailored like bespoke suits. For data-driven whizzes aiming for the stars, these platforms offer personalized rockets for the trip.

Remember, it’s all about picking the right tool for the right job. Just as a master chef chooses their knives, you should choose your data platform. Keep those business goals locked in your sights, and these Hadoop alternatives will help you shoot for the moon, through swathes of data and beyond.

Take a breath, choose your path, and let the data revolution roll on.

If you liked this article about Hadoop alternatives, you should check out this article about Next.js alternatives.

There are also similar articles discussing Bootstrap alternatives, React alternatives, Java alternatives, and JavaScript alternatives.

And let’s not forget about articles on GraphQL alternatives, jQuery alternatives, Django alternatives, and Python alternatives.
